Shorting Oracle

With Option Spreads, Part One

Our esteemed colleagues over at the Fractal Computing Substack had an article today that says that

Oracle is obsolete, perhaps the biggest short sale in a generation, we are going to prove it to you here.

Are Fractal Computing’s claims worthy of attention? After all, this firm claims that its software is 10,000 to 1 million times faster than legacy relational database technology. If you, the reader, are not technical, how do you evaluate these claims?

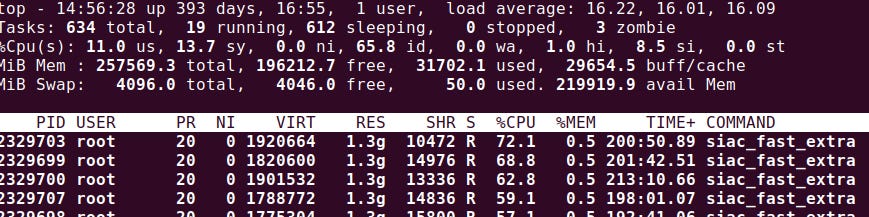

One way to do this is to ask someone with a technical background. Your author fits the bill, and although I haven’t done anything with Fractal, or even looked at their software, I’ve done a lot of work in their area of specialty, which is low latency computing. From what I can see, the claims made by the Fractal team are quite conservative: my own benchmarks in production projects show improvements of over 10 million times, not just the one million times mentioned in the Fractal Substack post. If you have access to a UNIX, Linux or macOS terminal, you can look at the Input / Output (i/o) usage on your machine right now using the top command. To run it, simply open a terminal and type top. You should see something like this:

Above is the top output for the SpreadHunter Real Time Search compute node, which is processing the stream of options data at over 100 million packets per second and applying algorithmic trading rules on each tick.

In this example, the Cpu(s) stats show 11.0 for the us statistic, which refers to the CPU cycles consumed by the user’s application. This means that the data handlers and trade algorithms are consuming 11% of the total CPU capacity. The sy statistic refers to system (kernel) operations, including memory allocation and i/o management. The wa statistic refers to the time spent waiting on i/o operations. In this example, there is zero wait time for i/o. Getting this value to zero took a lot of work, both in tuning the hardware system (computer, network cards, and drivers) and in optimizing the application code. Without tuning, the system stalls in a millisecond or two under the heavy load. hi and si refer to hardware and software interrupts respectively. These are also important areas of i/o to look at: the hardware interrupts are for the most part generated by the network hardware, and the software interrupts are generated by the network driver code.
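For readers who would rather check these fields with a script than by eye, here is a minimal Python sketch that pulls them out of a top summary line. The sample line is illustrative only: the us and wa values match the numbers quoted above, and every other value is invented. The function names parse_cpu_line and io_summary are hypothetical, and the parser assumes the newer "11.0 us, 2.1 sy" layout used by recent Linux versions of top. Older or non-Linux versions format this line differently.

```python
import re

# Illustrative sample only: us=11.0 and wa=0.0 come from the article text,
# all other values are invented for the example.
SAMPLE_LINE = "%Cpu(s): 11.0 us,  2.1 sy,  0.0 ni, 86.4 id,  0.0 wa,  0.2 hi,  0.3 si,  0.0 st"


def parse_cpu_line(line):
    """Map each two-letter CPU field (us, sy, wa, ...) to its percentage."""
    pairs = re.findall(r"(\d+(?:\.\d+)?)\s+([a-z]{2})\b", line)
    return {name: float(value) for value, name in pairs}


def io_summary(stats):
    """Group the fields the way the article discusses them."""
    return {
        "application": stats.get("us", 0.0),   # user-space code
        "kernel": stats.get("sy", 0.0),        # system operations
        "io_wait": stats.get("wa", 0.0),       # time stalled on i/o
        "interrupts": stats.get("hi", 0.0) + stats.get("si", 0.0),
    }


if __name__ == "__main__":
    print(io_summary(parse_cpu_line(SAMPLE_LINE)))
```

To try it on a live machine, paste the Cpu(s) line from your own top output in place of SAMPLE_LINE. A persistently non-zero io_wait value is the first thing to look into.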

If you are a recent Computer Science grad going into an interview, you are guaranteed to be asked lots of questions about the number of instructions in a given algorithm, and probably zero questions about i/o. Hint: the key phrase to study is Big O Notation. Once you get the job, or start your own company, you will quickly learn that the total number of instructions has very little impact on how fast your code runs. Instead, i/o operations (memory reads and writes, disk reads and writes, and network reads and writes) will dominate the time consumed by your application. The tighter you can pack the memory/disk/network data into contiguous blocks that the hardware can inhale in one gulp, the faster your code will run. Big O Notation will often have negligible impact on runtime performance, on modern processors at least. In the real world, your fastest code will probably spend extra instructions in order to minimize i/o overhead.
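The point about contiguous access can be tried at home with a small experiment. The Python sketch below sums the same array twice, once in sequential order and once in shuffled order. Both passes perform exactly the same number of additions, so any timing difference comes from the memory access pattern rather than the instruction count. This is a toy illustration, not a benchmark from any production system: the function names and the array size are arbitrary choices, and Python's interpreter overhead will likely mute an effect that would be more visible in C or C++.

```python
import random
import time
from array import array


def sum_in_order(data, order):
    """Add up data[i] for each index i, visiting indices in the given order."""
    total = 0.0
    for i in order:
        total += data[i]
    return total


def compare(n=2_000_000, seed=0):
    """Time a sequential pass and a shuffled pass over the same data."""
    data = array("d", (float(i) for i in range(n)))  # one contiguous block of doubles
    sequential = list(range(n))
    scattered = sequential[:]
    random.Random(seed).shuffle(scattered)           # same indices, random order

    start = time.perf_counter()
    sequential_sum = sum_in_order(data, sequential)
    middle = time.perf_counter()
    scattered_sum = sum_in_order(data, scattered)
    end = time.perf_counter()

    return {
        "sequential_sum": sequential_sum,
        "scattered_sum": scattered_sum,
        "sequential_seconds": middle - start,
        "scattered_seconds": end - middle,
    }


if __name__ == "__main__":
    for key, value in compare().items():
        print(key, value)
```

The two sums should match, since the same values are added in both passes. The timings will vary by machine, so run it a few times before drawing conclusions.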

So why is Oracle a short? Certainly not because their software is a million times slower. Oracle and all of its Relational Database competitors have been a million times slower since the 1990s, and none of their customers cared. The exception was the customer with a North America General Ledger application that took more than 24 hours to run on a Relational Database and had to finish before the Bank Branches opened in the morning. Whenever this happened, they would contact specialized consultants (like me) and we would speed up the application so that it ran in 15-30 seconds on 1990s hardware. After a few years, all of the performance critical sections were optimized, and for the rest of the enterprise, the 1 million times slower apps were just fine.

A handful of vendors in the 1990s took on Oracle, DB2, Sybase and other leading databases with a technology called the Object Oriented Database. Not only were these Object Oriented Databases a lot faster than Relational Databases, their sophisticated internal logic models matched business requirements a lot better than the clumsy row+column approach of the Relational Databases. The two biggest players were ObjectStore and Versant and, not surprisingly, both firms went bust before the decade of the 1990s was over. I just checked out the latest batch of open source object DBs but was underwhelmed, to put it mildly. If any readers are interested, mention this in the comments and I’ll send you a C++ Object Database I wrote a while back to test out.

The reason Oracle is threatened now is that they overextended their position in building AI Data Centers. Not only can they lose lots of money on the data centers, but if anything spills over and impacts their credit rating, this calls into question their ability to support their legacy customers, many of whom are extremely conservative and do not want to have their mission critical applications supported by a vendor with a Junk Credit Rating. Conservative Clients want a High Cost, Low Risk solution. If Oracle becomes a High Cost, High Risk Solution, all bets are off.

Our next post will look at trades to exploit this situation.

******************************************************************

Disclaimer: All Content on the Nuclear Option Substack is for Education and Information Purposes only. It is not a solicitation or recommendation to buy or sell any security. Before trading, consult with your Professional Financial Advisor and read the booklet Characteristics and Risks of Standardized Options Contracts, available from the Options Clearing Corporation.

Excellent angle on the credit risk spillover. The latency optimization story is familiar territory for me. I've seen enterprise deployments where the I/O bottleneck was easily a 100x bigger constraint than compute cycles. The real insight here isn't that Oracle's tech is outdated, it's that their conservative client base can't tolerate the credit rating hit if datacenter bets go sideways. In my experience, once these clients start evaluating alternatives, the switching costs drop way faster than most assume.